I was at a meeting recently with both the security and virtualization teams in the room, and they were having trouble connecting the security policies and objects that lived in each of their realms. A colleague of mine refers to this as the Rosetta Stone problem: the security team is usually speaking a different language than everyone else. What seems important to one team often doesn’t resonate with the other. The two then become disconnected, and one of the biggest advantages an IT team has, information sharing, can be completely lost.

So I came up with an analogy to try to help bridge the gap. Instead of looking at things in terms of IPS/IDS policy, firewall rules, vApps, or vDSs, let’s think about the attributes and behaviors of the one element that all teams have in common: the user. If we look at how, say, medical insurance policies are written, every trait of a person is considered; those traits form the core of the policy and determine how much it costs (it always comes down to dollars, right?). What if we did the same thing for security policies? If each group or piece of infrastructure that we are trying to secure could communicate back elements about a user, we could combine them so that not only would we have a more comprehensive security policy, but we would also be speaking the same language.

In this model, security policies become much more dynamic, and the rulesets that are active across devices become much more adaptive. You can move from having an environment-wide VDI policy for internal users to having a virtual machine whose policy and access level change to fit each user as they log in or log off. This not only closes many of the gaps we have with current “Swiss cheese” firewall or security device policies, but it also locks down many communication paths that are most likely unprotected today to the most restrictive set.

I mentioned information sharing before, and this is really where open standards and integration between all the security tools in an environment come into play. The first advantage here is the ability to enforce consistent policy based on user identity across an entire infrastructure. Identity attributes can be things like Active Directory group, geographic location, login history, the nature of the access request, etc. All these ingredients can be combined into something like a recipe that dictates what the security policy should be. For example, the security policy enforced when I’m sitting in an office accessing servers in the datacenter could be very different from the one enforced when I’m connecting from an airport in a country I’ve never traveled to.
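Here is a rough sketch of that recipe idea in Python. Everything in it is made up for illustration: the attribute names, the `datacenter-admins` group, and the three policy tiers are my assumptions, not anything from a real product or directory schema.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class UserContext:
    """Hypothetical identity attributes gathered from different tools."""
    ad_groups: FrozenSet[str]        # directory group membership
    country: str                     # where the user is connecting from
    known_countries: FrozenSet[str]  # derived from login history
    on_corporate_network: bool       # nature of the access request


def decide_policy(ctx: UserContext) -> str:
    """Combine the attributes into one policy tier (illustrative rules only)."""
    # A location never seen in the login history gets the tightest policy.
    if ctx.country not in ctx.known_countries:
        return "restricted"
    # Admins working from the office get full datacenter access.
    if ctx.on_corporate_network and "datacenter-admins" in ctx.ad_groups:
        return "full"
    return "standard"


office = UserContext(frozenset({"datacenter-admins"}), "US", frozenset({"US"}), True)
airport = UserContext(frozenset({"datacenter-admins"}), "IS", frozenset({"US"}), False)

print(decide_policy(office))   # full
print(decide_policy(airport))  # restricted
```

Same user, same group membership, two very different policies, purely because of the context around the request.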

The other big advantage of this user-centric approach to security is the increased information flow between solutions. If you think of all the security controls in your environment as a chain of services instead of individual pieces, information about what actions have been taken or what user identity attributes are present can be passed along this chain. This allows a device down the line, say Device C, to make a decision or modify policy based on outcomes that have already been produced by Devices A and B. Not only does each control get smarter by using this additional information, but you also get a global view of security policy and more informed enforcement of it.
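To make the chain idea a bit more concrete, here is a minimal Python sketch. The three "devices" are just functions that each add what they learned to a shared context, and the user list and threat signature are placeholders I invented for the example, not how any particular product works.

```python
from typing import Callable, Dict, List

Context = Dict[str, object]
Control = Callable[[Context], Context]

KNOWN_USERS = {"alice"}          # hypothetical identity store
BAD_SIGNATURE = "DROP TABLE"     # hypothetical threat signature


def device_a(ctx: Context) -> Context:
    """Identity check: records whether the user is known."""
    return {**ctx, "identity_verified": ctx.get("user") in KNOWN_USERS}


def device_b(ctx: Context) -> Context:
    """IPS-style check: flags payloads containing the signature."""
    return {**ctx, "threat_flagged": BAD_SIGNATURE in str(ctx.get("payload", ""))}


def device_c(ctx: Context) -> Context:
    """Enforcement point: decides using what Devices A and B already found."""
    allow = bool(ctx.get("identity_verified")) and not ctx.get("threat_flagged")
    return {**ctx, "action": "allow" if allow else "block"}


def run_chain(controls: List[Control], ctx: Context) -> Context:
    """Pass the context through each control in order."""
    for control in controls:
        ctx = control(ctx)
    return ctx


chain = [device_a, device_b, device_c]
print(run_chain(chain, {"user": "alice", "payload": "SELECT 1"})["action"])    # allow
print(run_chain(chain, {"user": "alice", "payload": "DROP TABLE x"})["action"])  # block
```

Device C never inspects the payload or the directory itself; it only acts on what was handed down the chain, which is the whole point.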

Now notice I didn’t mention any product names… that was on purpose. We’re still getting there within the ecosystem of solutions. Whether it’s open source tools, open APIs, or just vendors working together on these integrations, I hope that shifting our viewpoint from being device-centric to focusing the magnifying glass on the actual user will result in better solution collaboration and wider adoption of newer security technologies. Additionally, if security teams end up less isolated and less often left out of the design process, and if their reputations can be a little less tarnished from all this, it wouldn’t hurt either 🙂

Brian has been an IT professional for over 10 years in various customer-facing consultancy and technical roles. He specializes in virtualization, networking, and security technologies and holds various industry certifications such as: VCAP5-DCA/DCD, VCP4/5, VCIX-NV, and CISSP. He has authored multiple courses on networking and security topics and is an active member in the industry communities. Brian was also nominated as a VMware vExpert for the past 4 years for his work within the VMware and partner communities. He currently works as a security and compliance specialist for the NSBU within VMware.

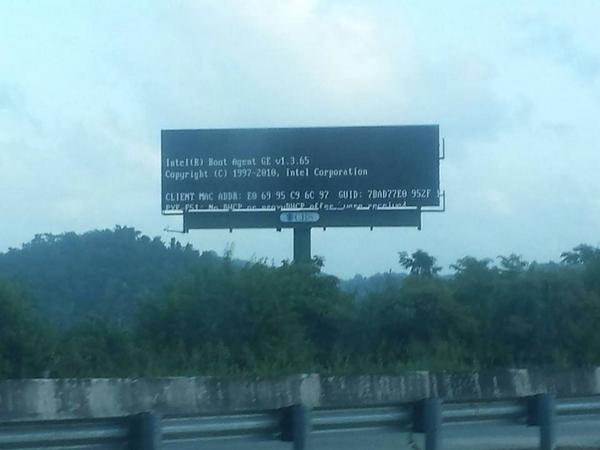

Digital billboards are all the rage; state highway administrations and ad agencies have them all across the world. We see them telling us about the latest AMBER Alert or selling us cologne. We also see them showing the blue screen of death or a boot error. Can these monstrosities at the bridge of the digital and actual highways actually teach us anything about IT operations?

… are way less subtle. Downtime is reflected directly back as lost revenue. Billboards sell ad time much like TV or radio sells commercial airtime, with customers buying leases of weeks or months at a time. For the state highway boards the issue is less about revenue and more about public safety, with outages potentially causing drivers to get into accidents or miss detours.

Guess what: this isn’t a huge jump from how IT is being transformed to better track IT expenses and map them to business needs. Business units are bringing SLOs to IT departments and counting on IT to meet them along with the SLAs. If internal IT can’t meet those goals, then the business goes elsewhere. Smart IT leaders are mixing their capabilities with those of public cloud providers to ensure they are providing the best possible solutions for their customers.

… hilarious. I am not sure I can get into security again in this post; VDM30in30 may be killing my willingness to argue for security-first architectures.

That’s pretty restrictive, when in reality cloud native apps are much more like walking down a Vegas buffet line. You can find just about everything on that table; none of it may be as good as what you could get at each cuisine’s niche restaurant, but it’s all serviceable. Cloud native apps are about mixing capabilities and leveraging existing external services to accomplish complex tasks.

… as like that weird commercial on TV where the people have their minds blown and purple smoke pours out. Because doesn’t the mixing of monolith and micro make strange bedfellows, or at the very least circus sideshow freaks? No, it turns out that it actually makes a lot of sense. Next time I go to the bowling alley I am going to ask for bowling flip-flops and use a duckpin puck instead of a ball. You can’t tell me how to do this, Mr. Bowling Alley Operator; after all, what are you going to do, spray me with the shoe disinfectant?
